以下是kimi对上面图片的分析

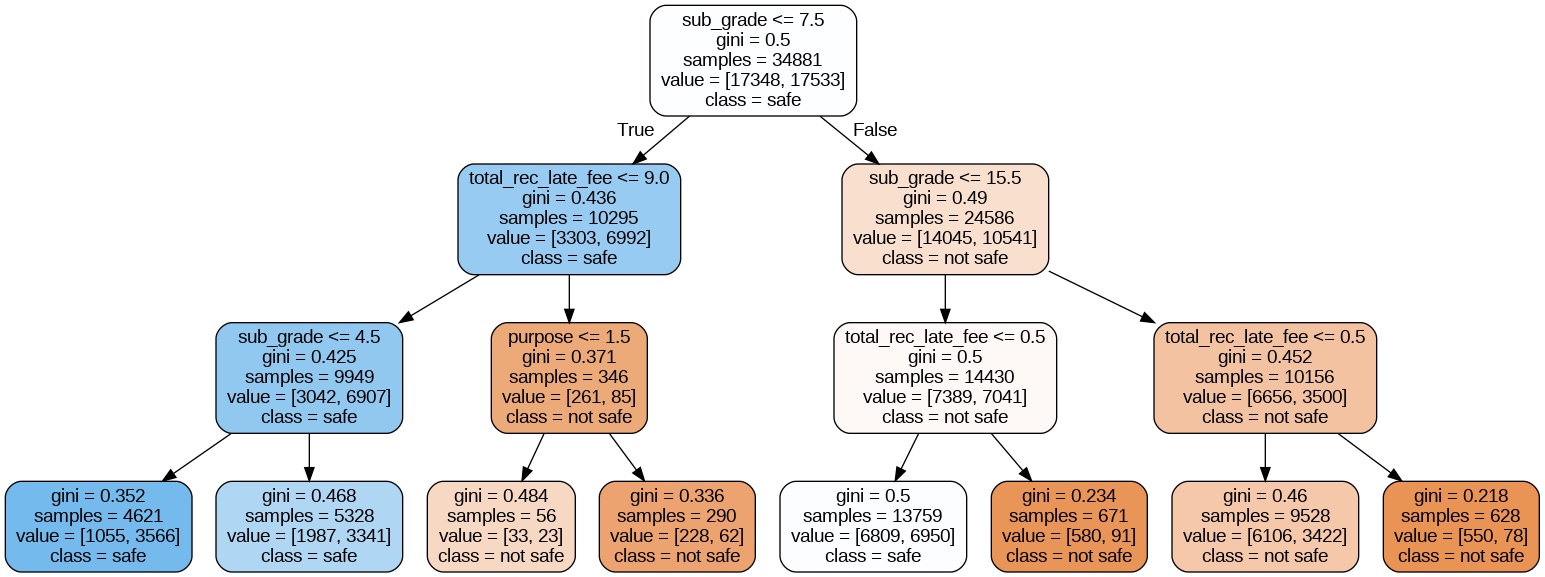

您提供的文件内容似乎是决策树算法生成的一个决策树的文本表示。这个决策树用于分类任务,其中每个节点都是一个决策点,每个叶节点代表一个分类结果。以下是对文件内容的解释:

-

根节点:决策树从根节点开始,根节点的决策条件是

sub_grade <= 7.5,表示子等级(可能是贷款风险等级)小于或等于7.5。这个节点的基尼不纯度指数(Gini Index)是0.5,表示在该节点下,数据集是完全随机的,即正负样本各占一半。 -

左子树:如果

sub_grade <= 7.5为真,那么进入左子树。左子树的第一个决策点是total_rec_late_fee <= 9.0,表示最近记录的逾期费用小于或等于9.0。这个节点的Gini指数是0.436,表示比根节点稍微纯净一些。 -

右子树:如果

sub_grade <= 7.5为假,那么进入右子树。右子树的第一个决策点是sub_grade <= 15.5,表示子等级小于或等于15.5。这个节点的Gini指数是0.49,表示这个分支的数据集比左子树的稍微不纯净一些。 -

进一步的分支:每个子节点都有可能继续分支,直到满足停止条件。例如,左子树的某个分支进一步根据

purpose <= 1.5进行决策,而右子树的分支可能根据total_rec_late_fee <= 0.5进行决策。 -

叶节点:最终,每个分支都会到达一个叶节点,叶节点给出了一个分类结果,例如

class = safe或class = not safe。这些叶节点的Gini指数通常较低,表示在这些节点下的数据集分类非常明确。 -

样本数量和分类值:每个节点都包含了样本数量(

samples)和分类值(value),分类值是一个二维数组,第一个数字代表正类(例如“safe”),第二个数字代表负类(例如“not safe”)。 -

Gini指数:Gini指数是一个衡量数据集纯度的指标,值越低表示数据集越纯净。在决策树中,我们希望在每个节点上通过特征选择来最大化信息增益,从而降低Gini指数。

这个决策树可能是用于信用评分或风险评估的,其中 sub_grade 可能代表贷款的子等级,total_rec_late_fee 可能代表总的最近记录的逾期费用,purpose 可能代表贷款目的。最终的分类结果 class 可能表示贷款是否被认为是安全的(safe)或不安全的(not safe)。

下面是chatgpt的解释

The image you’ve uploaded depicts a decision tree, which is used to model decisions and their possible consequences, in this case categorizing something as "safe" or "not safe" based on various features. Let’s break down this specific tree step-by-step:

Root Node

- Feature:

total_grade <= 7.5 - Gini: 0.5

- Samples: 34881

- Class Value: [17348, 17533]

- Decision: This is the initial decision point. If the

total_gradeis less than or equal to 7.5, we follow the left branch. Otherwise, we follow the right branch. The class distribution nearly equally split between safe and not safe, indicating initial uncertainty (Gini = 0.5 shows maximum impurity).

First Level

Left Branch

- Feature:

grade <= 0.5 - Gini: 0.436

- Samples: 10295

- Class Value: [3303, 6992]

- Decision: In this branch, we evaluate another feature,

grade. Ifgradeis less than or equal to 0.5, we move further left; otherwise, further right. The Gini coefficient has decreased, showing a decrease in impurity from the root.

Right Branch

- Feature:

sub_grade <= 15.5 - Gini: 0.49

- Samples: 24586

- Class Value: [14045, 10541]

- Decision: On this branch, the decision is based on

sub_grade. A lower Gini than the root node, but still high, showing substantial impurity.

Further Branching

Let’s detail one more depth level:

Further Left on the First Left Branch (following grade <= 0.5)

- Left Sub-branch:

-

Feature: None (Leaf Node)

-

Gini: 0.352

-

Samples: 4621

-

Class Value: [1055, 3566]

-

Class: safe

-

Right Sub-branch:

-

Feature:

purpose <= 1.5 -

Gini: 0.371

-

Samples: 346

-

Class Value: [261, 85]

-

Class: not safe

-

These nodes represent further decisions and classifications based on additional splits.

Observations

- Path to Decision: Each path from the root to a leaf node represents a set of conditions that lead to a final classification (either "safe" or "not safe").

- Gini Impurity: A Gini index of 0 indicates perfect purity (all samples in a node fall into a single category). Nodes are created by selecting splits that decrease the Gini impurity among child nodes compared to their parent node.

- Class Labels: These show the decision at each node, based on the majority of samples’ classification if no further split can achieve better purity or other stopping criteria have been met (like maximum depth or minimum sample size at a node).

This decision tree helps in making predictions by tracing through the tree with the values of the features in the dataset, following branches according to these values until a leaf node is reached, which gives the prediction.