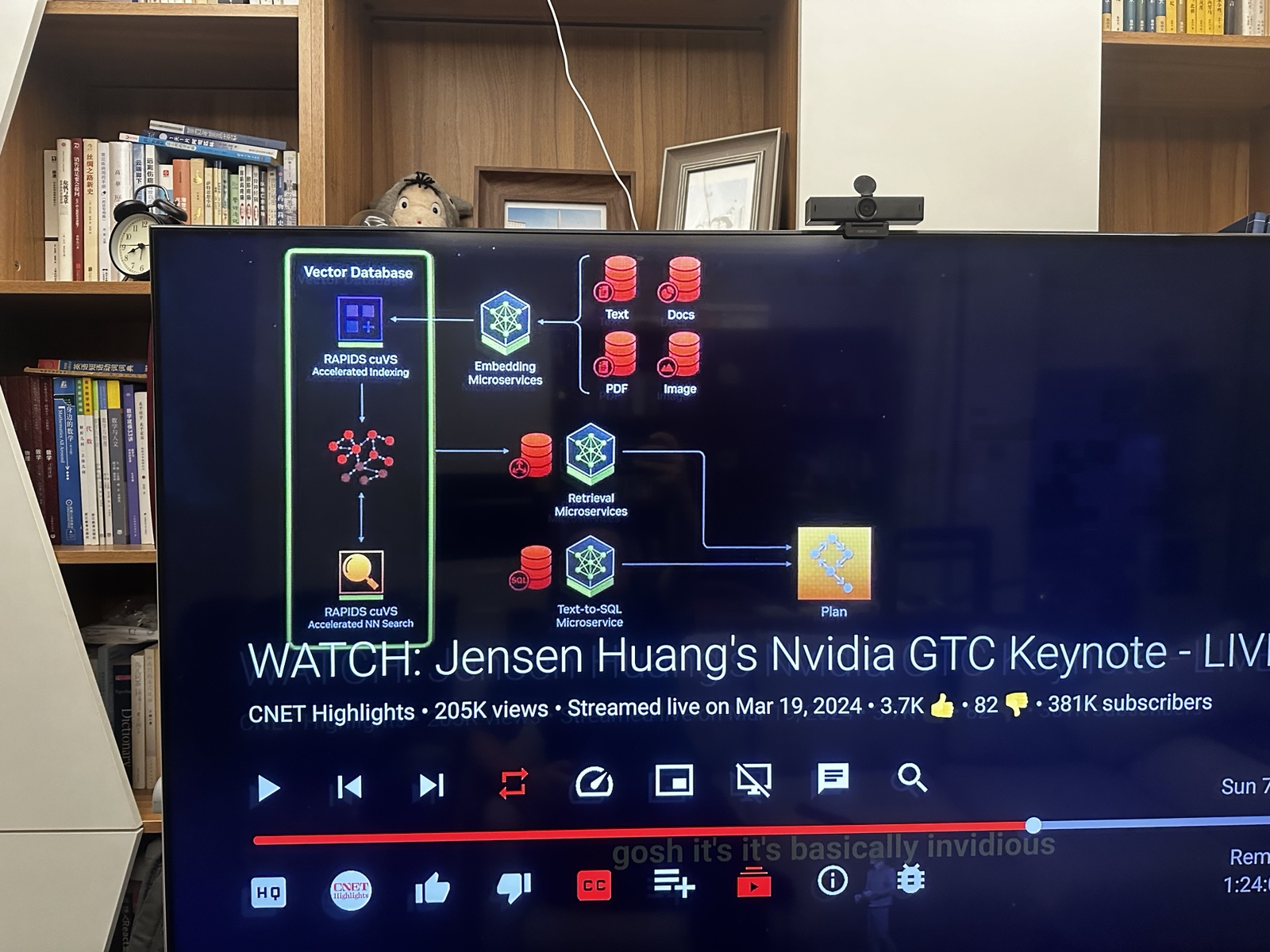

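To understand what NIM is meant to do, I excerpted the points I found interesting from the articles below. The photo above is one I took during the GTC online conference.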

Nvidia Looks to Accelerate GenAI Adoption with NIM

Kimi's answer:

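If you only use Nvidia Inference Microservice (NIM) on a single machine or an edge device, you may not need Helm. Helm is a package manager for Kubernetes, used mainly to automate application deployment in Kubernetes environments. When you run NIM on a single machine or an edge device, your environment probably does not depend on Kubernetes, so you do not need Helm to deploy and manage it.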

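In this case, you can deploy NIM directly as a containerized microservice, or integrate it into your existing infrastructure. NIM is designed to adapt to different deployment scenarios, including but not limited to Kubernetes clusters, cloud environments, virtual machines, and physical machines. So whether you are in a data center, a private cloud, or an edge computing environment, you can use NIM to accelerate AI inference tasks.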

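In addition, because NIM is containerized, it can be moved between and run in different environments without major changes to the underlying infrastructure. This flexibility makes NIM an ideal choice for deploying AI models across many kinds of computing environments.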

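In short, if your use case does not involve a Kubernetes cluster, you do not need Helm. You can deploy and run NIM directly, or integrate it into your single-machine or edge-device environment, to get efficient AI inference.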

From “inference service” to “inference microservice”

- Nvidia Inference Microservices (NIM), which packages optimized inference engines, industry-standard APIs and support for AI models into containers for easy deployment. While NIM provides prebuilt models, it also allows organizations to bring their own proprietary data and will support and help to accelerate Retrieval Augmented Generation (RAG) deployment.
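The "industry-standard APIs" point means a client talks to the container over plain HTTP rather than through a special SDK. Below is a minimal sketch in Python, assuming the container exposes an OpenAI-style `/v1/chat/completions` route at `http://localhost:8000`; the base URL, the model name and the helper `build_chat_request` are placeholders for illustration, not values from the article. It only builds the request, so it runs without a GPU or a live service.

```python
import json
import urllib.request

# Assumptions (not from the article): the NIM container is reachable at this
# base URL and exposes an OpenAI-style chat-completions route.
BASE_URL = "http://localhost:8000"
MODEL = "example-org/example-model"  # hypothetical model name


def build_chat_request(prompt: str, base_url: str = BASE_URL, model: str = MODEL,
                       max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) a POST request in the OpenAI chat-completions format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("What is a NIM?")
    print(req.get_method(), req.full_url)
    # To actually call a running service (assumed response shape):
    # with urllib.request.urlopen(req, timeout=30) as resp:
    #     print(json.load(resp)["choices"][0]["message"]["content"])
```

Because the request is standard JSON over HTTP, the same client code would work whether the container runs on a laptop, a Linux server or behind a Kubernetes service; only the base URL changes.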

What exactly is Nvidia NIM?

At the most basic level, a NIM is a container full of microservices.

The container can include any type of model, ranging from open to proprietary models, that can run anywhere there is an Nvidia GPU — be that in the cloud, or even just in a laptop. In turn, that container can be deployed anywhere a container can run,

* which could be a Kubernetes deployment in the cloud,

* a Linux server or

* even a serverless Function-as-a-Service model. Nvidia will have the serverless function approach on its new ai.nvidia.com website, where developers can go to begin working with NIM prior to deployment.

To be clear, a NIM isn’t a replacement for any prior approach to model delivery from Nvidia. It’s a container that includes a highly optimized model for Nvidia GPUs along with the necessary technologies to improve inference.

- Better support for RAG

The RAG approach will benefit from the integration of NVIDIA NeMo Retriever microservices inside of NIM deployments. NeMo Retriever is a technology that Nvidia announced in November 2023 as an approach to help enable RAG with an optimized approach for data retrieval.
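To make the retrieve-then-generate idea concrete, here is a toy sketch of the retrieval and prompt-augmentation steps of RAG. This is not NeMo Retriever: it swaps in plain bag-of-words cosine similarity over an in-memory list, and the documents and function names (`retrieve`, `augment`) are made up for illustration. A production pipeline would typically use an embedding model and a vector store, then send the augmented prompt to the inference service.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory corpus; a real RAG setup would index proprietary documents.
DOCS = [
    "NIM packages optimized inference engines and models into containers.",
    "Helm is a package manager for Kubernetes.",
    "NeMo Retriever provides microservices for data retrieval in RAG pipelines.",
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query (the 'R' in RAG)."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def augment(query: str, context: list[str]) -> str:
    """Prepend retrieved passages to the user question (the 'A' in RAG)."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


if __name__ == "__main__":
    question = "What is Helm used for?"
    prompt = augment(question, retrieve(question, DOCS, k=1))
    print(prompt)  # this prompt would then be sent to the model for generation
```

The retrieval step is where an optimized retriever matters: the generation step is unchanged, but better-ranked context directly improves what the model is asked to answer from.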

Besides LangChain and LlamaIndex, another application-framework company has now appeared: deepset.

Nvidia's NIM website:

https://www.nvidia.com/en-us/ai/